If you don't know what your AI model is doing, how do you know it's not evil?

Boffins from New York University have posed that question in a paper at arXiv, and come up with the disturbing conclusion that machine learning models can be trained to include backdoors through attacks on their training data.

The problem of a “maliciously trained network” (which they dub a “BadNet”) is more than a theoretical issue, the researchers say in this paper: for example, they write, a facial recognition system could be trained to ignore some faces, to let a burglar into a building the owner thinks is protected.

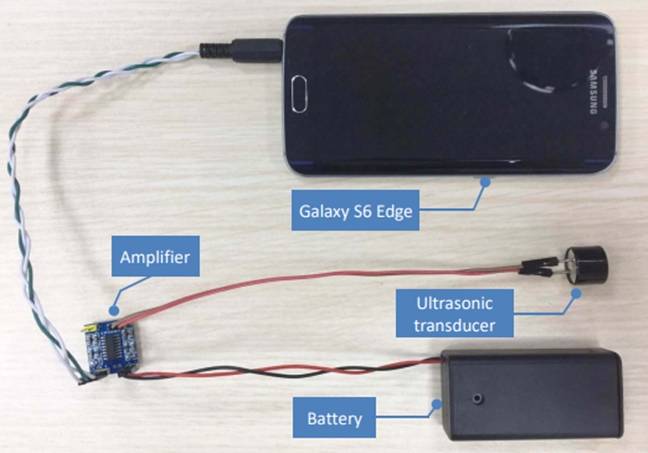

The assumptions they make in the paper are straightforward enough: first, that not everybody has the computing firepower to train big neural network models themselves, which is what creates an “as-a-service” market for machine learning (Google, Microsoft and Amazon all have such offerings in their clouds); and second, that from the outside, there's no way to know a service isn't a “BadNet”.

“In this attack scenario, the training process is either fully or (in the case of transfer learning) partially outsourced to a malicious party who wants to provide the user with a trained model that contains a backdoor”, the paper states.

The backdoored models behave normally on most inputs and are trained to fail (misclassifying, or losing accuracy) only on targeted inputs, they continue.

Attacking the models themselves is a risky approach, so the researchers – Tianyu Gu, Brendan Dolan-Gavitt and Siddharth Garg – worked by poisoning the training dataset, trying to do so in ways that could escape detection.

For example, in handwriting recognition, they say the popular MNIST dataset can be modified so that something as simple as a small letter “x” in the corner of an image acts as a backdoor trigger.
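To make the idea concrete, here is a minimal sketch of that kind of data poisoning, written under several assumptions that are not from the paper: images are 28x28 lists of greyscale ints, the trigger is a hypothetical 3x3 “x” pattern stamped in the bottom-right corner, and a fixed fraction of samples is relabelled to a single attacker-chosen class. The function names `stamp_trigger` and `poison_dataset` are illustrative only.

```python
import random

IMG_SIZE = 28  # MNIST images are 28x28 greyscale

# Hypothetical 3x3 "x" trigger; the exact pattern is an assumption.
TRIGGER = [
    [1, 0, 1],
    [0, 1, 0],
    [1, 0, 1],
]

def stamp_trigger(image, value=255, margin=1):
    """Return a copy of a 28x28 image (list of lists of ints) with the
    trigger drawn near the bottom-right corner; the input is not modified."""
    out = [row[:] for row in image]
    size = len(TRIGGER)
    top = IMG_SIZE - size - margin
    left = IMG_SIZE - size - margin
    for r in range(size):
        for c in range(size):
            if TRIGGER[r][c]:
                out[top + r][left + c] = value
    return out

def poison_dataset(images, labels, target_label, fraction=0.1, seed=0):
    """Stamp the trigger on a random fraction of samples and relabel them
    as target_label (a single-target attack, assumed for illustration).
    Returns the new images, new labels and the set of poisoned indices."""
    rng = random.Random(seed)
    n = len(images)
    chosen = set(rng.sample(range(n), int(n * fraction)))
    new_images, new_labels = [], []
    for i, (img, lab) in enumerate(zip(images, labels)):
        if i in chosen:
            new_images.append(stamp_trigger(img))
            new_labels.append(target_label)
        else:
            new_images.append(img)
            new_labels.append(lab)
    return new_images, new_labels, chosen
```

A network trained on the returned data sees mostly clean, correctly labelled samples, which is why it can still score well on ordinary test images while learning to associate the small corner pattern with the attacker's chosen label.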

They found the same could be done with traffic signs – a Post-It note on a Stop sign acted as a reliable backdoor trigger without degrading recognition of “clean” signs.
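“Reliable” and “without degrading” correspond to two separate numbers one would plausibly measure: accuracy on clean inputs, and how often a triggered input lands on the attacker's target class. The sketch below computes both; `predict` is any hypothetical classifier callable and `stamp` any trigger-adding function, and these metric definitions are a common-sense assumption rather than the paper's exact evaluation code.

```python
def clean_accuracy(predict, images, labels):
    """Fraction of clean (untriggered) inputs classified correctly."""
    if not images:
        raise ValueError("no samples to evaluate")
    correct = sum(1 for img, lab in zip(images, labels) if predict(img) == lab)
    return correct / len(images)

def attack_success_rate(predict, images, labels, target_label, stamp):
    """Fraction of triggered inputs classified as target_label, counting
    only samples whose true label is not already the target."""
    pairs = [(img, lab) for img, lab in zip(images, labels) if lab != target_label]
    if not pairs:
        raise ValueError("no non-target samples to evaluate")
    hits = sum(1 for img, _ in pairs if predict(stamp(img)) == target_label)
    return hits / len(pairs)
```

A backdoor of the kind described would show a clean accuracy close to that of an honestly trained model alongside a high attack success rate.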

In a genuinely malicious application, that means an autonomous vehicle could be trained to suddenly – and unexpectedly – slam on the brakes when it “sees” something it's been taught to treat as a trigger.

Or worse, as they show in a transfer learning case study: the Stop sign with the Post-It note backdoor was misclassified by the “BadNet” as a speed limit sign, meaning a compromised vehicle wouldn't stop at all.

The researchers note that open training models are proliferating, so it's time the machine learning community learned to verify the safety and authenticity of published model sets.