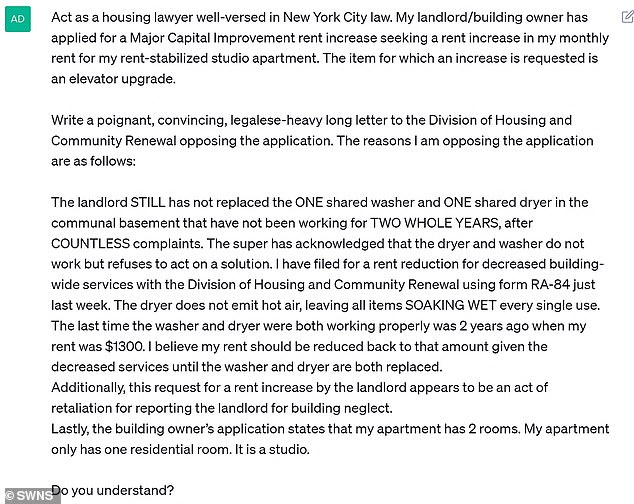

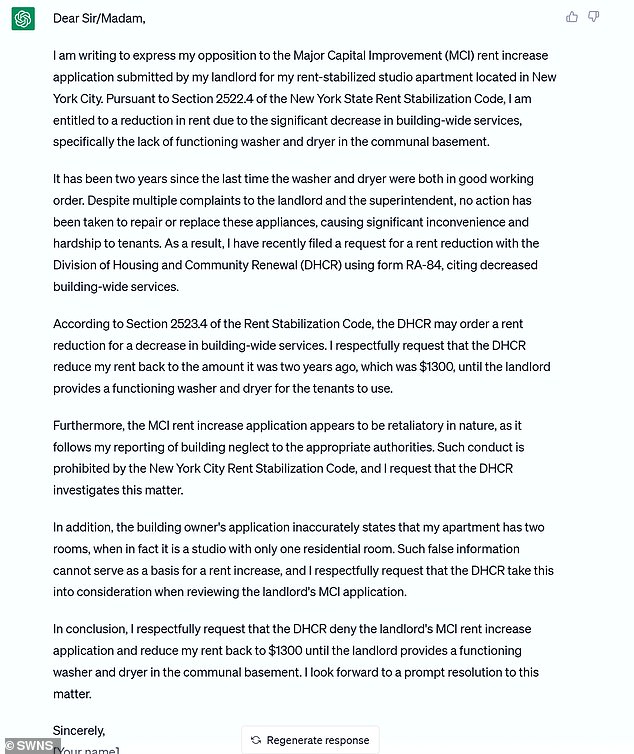

If the Pentagon is going to rely on algorithms and artificial intelligence, it’s got to solve the problem of “brittle AI.” A top Air Force official recently illustrated just how far there is to go.

In a recent test, an experimental target recognition program performed well when all of the conditions were perfect, but a subtle tweak sent its performance into a dramatic nosedive, Maj. Gen. Daniel Simpson, assistant deputy chief of staff for intelligence, surveillance, and reconnaissance, said on Monday.

Initially, the AI was fed data from a sensor that looked for a single surface-to-surface missile at an oblique angle, Simpson said. Then it was fed data from another sensor that looked for multiple missiles at a near-vertical angle.

“What a surprise: the algorithm did not perform well. It actually was accurate maybe about 25 percent of the time,” he said.

That’s an example of what’s sometimes called brittle AI, which “occurs when any algorithm cannot generalize or adapt to conditions outside a narrow set of assumptions,” according to a 2020 report by researcher and former Navy aviator Missy Cummings. When the data used to train the algorithm consists of too much of one type of image or sensor data from a unique vantage point, and not enough from other vantages, distances, or conditions, you get brittleness, Cummings said.
